Artificial Intelligence... is really just "Someone Else's Intelligence"

Someone else's writing, painting, coding, speaking... someone else's work.

Sometimes, in computing, we give a name to an idea… that is completely misleading.

One of my favorite examples is “The Cloud”. We store files in “The Cloud”. We run services in “The Cloud”. Cloud, cloud, cloud.

Well. What, pray tell, is… “The Cloud”?

Simple. “The Cloud” is nothing more than a vague way to refer to “computers connected to the Internet”.

There is no “Cloud”. Not really. We talk about it as if it were some magical thing that exists (in some unspecified location) and uses Star Maths and Wishy Thinking to handle all of our Internet-y needs. But it’s not.

When someone says “Save this file to The Cloud”, what they are really saying is “Save this file to a specific computer server”.

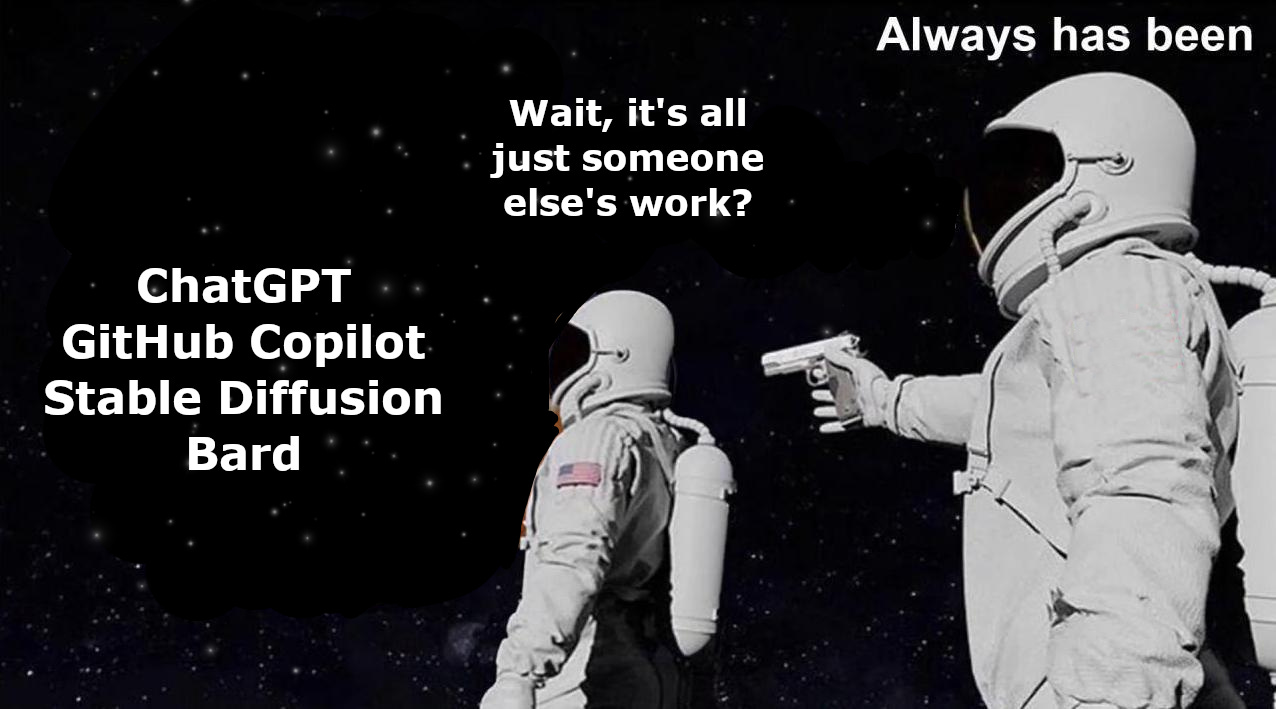

The phrase “There is no Cloud… there is only someone else’s computer” has become a common, amusing refrain in nerdy circles.

You keep using that word…

The same thing is now happening with “Artificial Intelligence” — a term that is profoundly misleading.

Services like ChatGPT, GitHub Copilot, and others are making waves with their “A.I.” and gaining a huge base of users (and media attention) in the process.

People are using ChatGPT to create everything from essays to answers to questions on history tests. GitHub Copilot is providing people with huge amounts of code for a wide variety of software projects.

But how, exactly, are these “A.I.” systems creating all of this?

By using keyword searching and pattern recognition software… to find some pre-existing bit of information in its vast database… and provide it to you. A database filled with word definitions, code, pictures, facts, poetry, newspaper articles, tweets, and every other nugget of information the A.I. can get its hands on.

The acquiring of this data is called “training”. The companies which run these A.I. services “train” their A.I. by filling the database up with data from all across the Internet.

And who, pray tell, made all of that “training data” in the first place?

People. Like you and me.

Writers, musicians, painters, students, politicians, programmers… and even people posting their thoughts on Social Media.

What does that mean, exactly?

A.I. is Biff Tannen

It means that what we currently call “Artificial Intelligence” is a piece of software which copies the work of people — of all of us — and passes it off as its own.

“A.I.” is the kid who didn’t prepare for a test… so he copied the answers off you. Seriously.

That is exactly what is happening when you ask ChatGPT or GitHub Copilot for something. It spits back at you something that someone else created.

And it’s quite clever about it. It uses its vast database of code, words, etc. to tweak and alter the text it returns so that it appears, at first glance, to be original work.

ChatGPT is a school bully… demanding that the nerd do all of the bully’s homework for him… then rewriting the homework himself so that it is in the bully’s handwriting. Thus fooling the teacher (hopefully).

Just like what Biff Tannen did to George McFly in Back to the Future.

The nerd, in this scenario, does not get credit for his work. The kid getting his test copied, likewise, does not get credit.

And neither do you. A.I. copies your work — your social posts, articles, code, etc. — and passes it off as its own.

Artificial Intelligence is misleading

Is A.I. “artificial”? Well. Sorta.

Is A.I. “intelligence”? I mean. Kinda? I guess?

In the same sense that Biff Tannen copying from George McFly could also be called “a student doing his homework”.

Calling the current round of such systems “A.I.” is, at best, misleading. They are highly sophisticated — impressively so — cheating systems. Nothing more.

Unfortunately these “Cheating Systems” — these “Biff Tannens” — are having a truly massive (and devastating) impact on the world.

The end result

As the usage of such “A.I.” systems increases — and people become increasingly reliant upon them — a few things are going to happen (and are already happening):

Original authors are not credited for their hard work (in any way).

Original work — by humans — is devalued, causing multiple catastrophic problems:

Creators (writers, painters, etc.) are paid less and employed less.

Less original, quality, interesting work is created.

People get more dumberer.

The more sophisticated the A.I. software gets… the better it will be at pretending to have original ideas. The better it will get at copying your homework and fooling the teacher. At cheating.

And it’s already incredibly good at it.

The cheaters will copy the cheaters

Let us end on something fun (and horrifying) to think about:

What happens as the total amount of content (articles, posts, etc.) written by these A.I. systems continues to increase?

And then those “A.I. creations” are fed back into the A.I. databases? A.I. will be “trained” (*cough*) on what other A.I. software (and itself) have already copied from others.

That cycle will continue, at an increasingly rapid pace. Every error, misquote, and false statement will be recycled back into the system and its impact will be amplified.
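To make that feedback loop a bit more concrete, here is a toy simulation in Python. It is a sketch under made-up assumptions, not a measurement of any real system: the function name `simulate_feedback`, the corpus sizes, and both error rates are invented for illustration. Each round, the A.I. output repeats errors at whatever rate they already appear in the corpus, adds a few fresh mistakes of its own, and then gets dumped back into the corpus.

```python
def simulate_feedback(generations, human_docs=1000.0, human_error_rate=0.02,
                      ai_docs_per_generation=500.0, new_error_rate=0.05):
    """Toy model: share of erroneous documents in a corpus that keeps
    absorbing A.I. output. Every number here is a hypothetical placeholder."""
    total = human_docs
    bad = human_docs * human_error_rate
    history = [bad / total]  # error share before any A.I. output is added

    for _ in range(generations):
        corpus_error_rate = bad / total
        # Assumption: A.I. output inherits the corpus error rate, then adds
        # fresh mistakes to the portion that would otherwise be correct.
        ai_error_rate = corpus_error_rate + (1 - corpus_error_rate) * new_error_rate
        total += ai_docs_per_generation
        bad += ai_docs_per_generation * ai_error_rate
        history.append(bad / total)

    return history


if __name__ == "__main__":
    for generation, share in enumerate(simulate_feedback(10)):
        print(f"round {generation}: {share:.1%} of the corpus is wrong")
```

In this model, with any fresh-error rate above zero, the share of bad material in the corpus only goes up from one round to the next. Set `new_error_rate` to 0 and the share just holds steady: the errors already in there never wash out.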

The truth is… I am not sure what that looks like as time goes on. But, I’ll tell you this right now… it’s not awesome.

Want to make sure you get every article and podcast? Be sure to be a subscriber to The Lunduke Journal of Technology.

The other day I thought to use ChatGPT to get me started on how to set up a connection to quassel-core in Rust. It came up with a fully fantastic binding that does not exist at all. A while back I asked it to do a multiplication in Z80 assembly and it used a MUL instruction from some other CPU. I'm thinking about setting up bogus projects with this code to feed it right back into it.

AI is the Uroboros of unintelligible nonsense.